Building a Streaming Music Service with Phoenix and Elixir

Aaron D. Parks

April 11, 2022

I thought it would be nice to make a streaming music service focused on bringing lo-fi artists and listeners together. Early on, I put together a series of prototypes to explore with a small group of listeners and artists. Since this is a technical article, I'll jump right into the requirements we arrived at, though I'd love to also do an article on the strategies and principles that guided our exploration.

We liked a loose retro-computing aesthetic with a looping background that changed from time to time. We preferred having every listener hear the same song and see the same background at the same time. And we liked the idea of sprinkling some “bumpers” or other DJ announcements between the songs.

During the prototyping phase, I found that updating the src attribute of an audio element at the end of each song provided a workable streaming experience and was very straightforward to implement. I could likewise update the background loops when they had been running long enough.

The front end is probably worth an article or two on its own, but the gist is simple enough. Upon loading, it makes a request to the back end for the current audio and current background. The back end returns a URL for each along with metadata to show. It also returns how much time remains before the audio ends or the background should change. The front end plays the URLs and shows their metadata. It sets timers and requests the new current audio or background when the time is up.

The job of the back end, then, is straightforward: it will assemble an unending playlist of songs, bumpers, and backgrounds from what it has on hand.

When the front end asks for the current audio, the back end checks if there is already an audio item playing and provides it if so. If there's not already an audio item playing, or if it's close enough to the end that it would be better for the front end to wait a moment and start playing the next audio item, it figures out what the next item should be and provides that.

(from the LofiLimo.Audio module)

    def current_play(now) do
      threshold = fetch_option!(:next_threshold)

      {:ok, play} =
        Repo.transaction(fn ->
          Ecto.Adapters.SQL.query!(Repo, "LOCK audio_plays")
          last = Repo.one(Play.last())

          if is_nil(last) or Play.remaining(last, now) < threshold do
            next_play(now, last)
          else
            last
          end
        end)

      play
    end

To prevent more than one "next item" from being selected, I wrapped the decision in a table lock. This was quick and easy, but upon reflection I think a GenServer that caches the current item would be a better way to go. It would save the table lock by serializing concurrent requests through its message loop and it would also save a database query for most requests (the ones that don't cause a next item to be selected).
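
The GenServer idea could look roughly like the sketch below. It is not the code the service runs: the module name `CurrentPlayCache`, the `:next_fun` and `:threshold` options, and the `started`/`duration` map keys are all assumptions made for illustration, with `next_fun` standing in for the database-backed `next_play/2`.

```elixir
defmodule CurrentPlayCache do
  use GenServer

  # Options (both hypothetical):
  #   :next_fun  - fn now, last -> play end, selects and stores the next play
  #   :threshold - milliseconds before the end at which to move on
  def start_link(opts) do
    GenServer.start_link(__MODULE__, opts, name: __MODULE__)
  end

  # All callers go through the server's mailbox, one request at a time.
  def current_play(now) do
    GenServer.call(__MODULE__, {:current_play, now})
  end

  @impl true
  def init(opts) do
    {:ok,
     %{
       next_fun: Keyword.fetch!(opts, :next_fun),
       threshold: Keyword.fetch!(opts, :threshold),
       last: nil
     }}
  end

  @impl true
  def handle_call({:current_play, now}, _from, state) do
    if is_nil(state.last) or remaining(state.last, now) < state.threshold do
      # Only this branch would need to touch the database.
      play = state.next_fun.(now, state.last)
      {:reply, play, %{state | last: play}}
    else
      # The common case is answered from the cached play.
      {:reply, state.last, state}
    end
  end

  # Milliseconds left in a play, assuming `duration` is in milliseconds.
  defp remaining(%{started: started, duration: duration}, now) do
    started
    |> DateTime.add(duration, :millisecond)
    |> DateTime.diff(now, :millisecond)
  end
end
```

Because every call passes through one process's mailbox, two simultaneous requests cannot both select a next item. A fuller version would probably load the last play from the database in `init/1` so a restart doesn't lose the current item, and it would only serialize requests within a single node.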

The next audio item to play might be a DJ announcement (if there hasn't been one in quite a while) or a song. The starting time for the next play is usually the end of the previous item, but the very first play should start immediately. I add a small gap between songs to accommodate differences in how long it takes each front end instance to play out the audio.

(from the LofiLimo.Audio module)

    defp next_play(now, last) do
      last_ended = if is_nil(last), do: now, else: Play.ended(last)
      gap = fetch_option!(:gap)

      started =
        [now, last_ended]
        |> Enum.max(DateTime)
        |> DateTime.add(gap, :millisecond)
        |> DateTime.truncate(:second)

      params = %{started: started}

      item =
        if time_for_announcement(now) do
          next_announcement_item()
        else
          next_song_item()
        end

      %Play{}
      |> Play.changeset(params, item)
      |> Repo.insert!()
      |> Repo.preload(item: :media)
    end
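
To make the start-time arithmetic easier to follow, here is the same pipeline pulled into a standalone function. The module name `StartTimeSketch` and the function `next_start/3` are hypothetical, and the gap is passed as an argument here rather than read from configuration.

```elixir
defmodule StartTimeSketch do
  # `last_ended` is nil when nothing has played yet; `gap_ms` is in milliseconds.
  def next_start(now, last_ended, gap_ms) do
    [now, last_ended || now]
    # Never schedule a play in the past: take the later of the two times.
    |> Enum.max(DateTime)
    |> DateTime.add(gap_ms, :millisecond)
    # Drop fractional seconds so the stored start time is a whole second.
    |> DateTime.truncate(:second)
  end
end
```

With a 1,500 ms gap, a previous item ending at 12:00:12 and a request arriving at 12:00:10 give a start of 12:00:13 (12:00:13.500 truncated to the second). If the previous item ended long ago, the start is based on the current time instead.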

When selecting the next song or announcement to play, I didn't want to use a truly random selection. Instead, I wanted to pick the next item the way a human might: randomly, but only from among the least-recently-played half of songs or announcements.

(from the LofiLimo.Audio module)

    defp next_song_item do
      song = Repo.one!(Song.next())
      item = Repo.get(Item, song.item_id)
      Map.put(item, :song, song)
    end

(from the LofiLimo.Audio.Song module)

    def next do
      from(s in __MODULE__,
        join: i in assoc(s, :item),
        left_join: p in assoc(i, :plays),
        group_by: s.id,
        order_by: [over(ntile(2), :window), fragment("random()")],
        windows: [window: [order_by: [asc_nulls_first: max(p.started)]]],
        limit: 1
      )
    end

Selecting backgrounds is mostly the same, but simplified a little since there is no equivalent to an announcement for the backgrounds. There is a little twist in that a background doesn't have a natural amount of time it should be shown, so I select one randomly from within a reasonable range.

(from the LofiLimo.Backgrounds module)

    def current_play(now) do
      threshold =
        :lofi_limo
        |> Application.fetch_env!(__MODULE__)
        |> Keyword.fetch!(:next_threshold)

      {:ok, play} =
        Repo.transaction(fn ->
          Ecto.Adapters.SQL.query!(Repo, "LOCK audio_plays")
          last = Repo.one(Play.last())

          if is_nil(last) or Play.remaining(last, now) < threshold do
            next_play(now, last)
          else
            last
          end
        end)

      play
    end

    defp next_play(now, last) do
      background = Repo.one!(Background.next())
      last_ended = if is_nil(last), do: now, else: Play.ended(last)

      started =
        [now, last_ended]
        |> Enum.max(DateTime)
        |> DateTime.truncate(:second)

      duration = Enum.random(180_000..480_000)

      %Play{}
      |> Play.changeset()
      |> Changeset.put_assoc(:background, background)
      |> Changeset.put_change(:started, started)
      |> Changeset.put_change(:duration, duration)
      |> Repo.insert!()
      |> Repo.preload(background: :media)
    end

(from the LofiLimo.Backgrounds.Background module)

    def next do
      from(b in __MODULE__,
        left_join: p in assoc(b, :plays),
        group_by: b.id,
        order_by: [over(ntile(2), :window), fragment("random()")],
        windows: [window: [order_by: [asc_nulls_first: max(p.started)]]],
        limit: 1
      )
    end

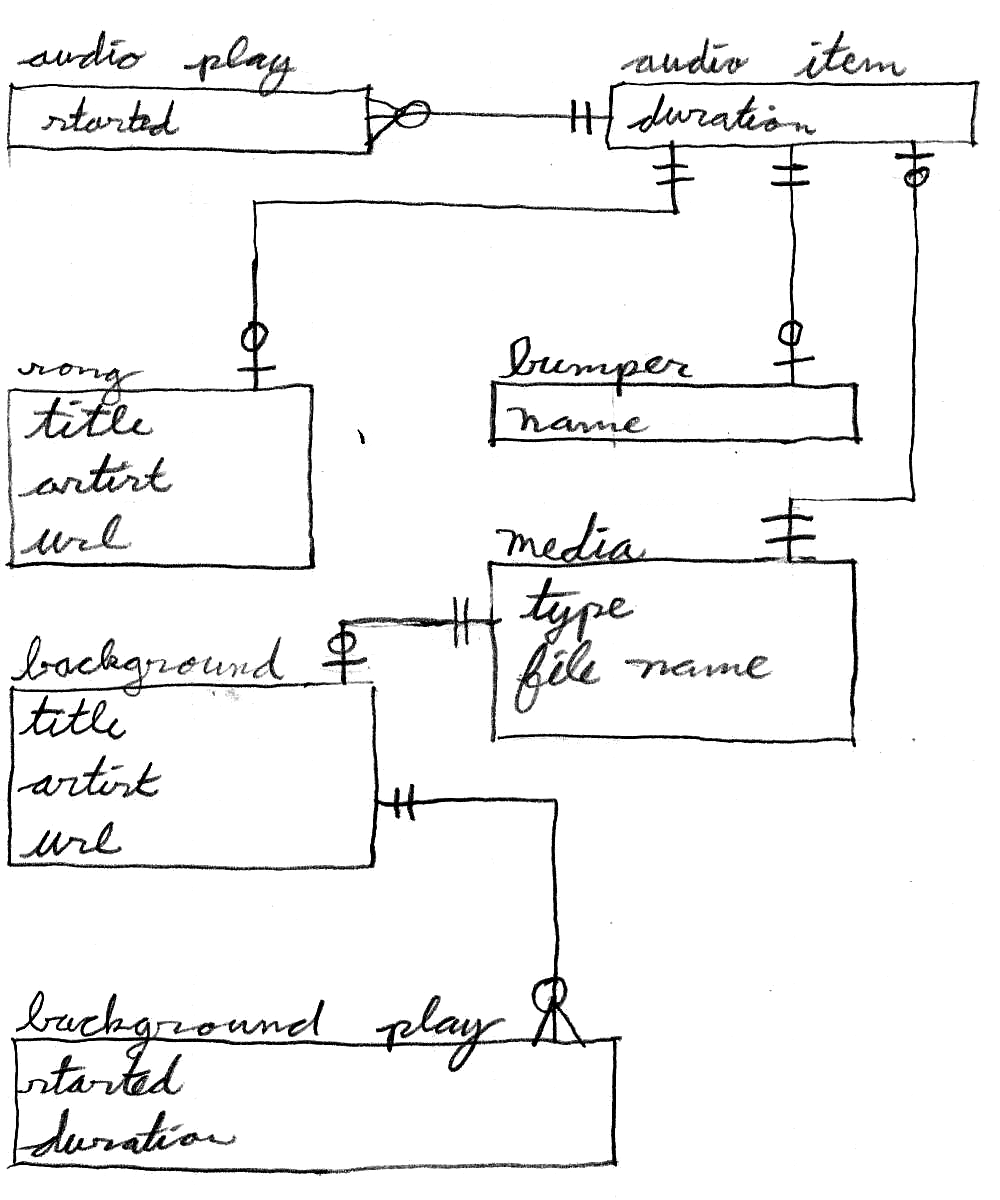

There are a couple of small wrinkles in the data model. One is that bumpers (or other announcements) have different properties than a song. To smooth this out, I abstracted both through an “audio item.” The other wrinkle is that both backgrounds and audio items have properties related to their associated binary data. I factored these properties out to “media.”

Phoenix makes it easy to render and send a JSON response. Instead of writing an EEx template for each endpoint, I added a render/2 function clause for each endpoint to my view module. These clauses return standard Elixir data structures which Phoenix renders to their JSON equivalents. The current audio has a structure which depends on whether it is a bumper or a song. The front end uses this difference to adjust its metadata display.

(from the LofiLimoWeb.PlayerView module)

    def render("current_audio.json", %{play: play, remaining: remaining}) do
      cond do
        match?(%LofiLimo.Audio.Bumper{}, play.item.bumper) ->
          bumper(play.item, remaining)

        match?(%LofiLimo.Audio.Song{}, play.item.song) ->
          song(play.item, remaining)
      end
    end

    def render("current_background.json", %{play: play, remaining: remaining}) do
      %{
        file_url: Media.file_url(play.background.media),
        remaining: remaining,
        artist: play.background.artist,
        title: play.background.title,
        url: play.background.url
      }
    end

    defp bumper(item, remaining) do
      %{
        file_url: Media.file_url(item.media),
        remaining: remaining
      }
    end

    defp song(item, remaining) do
      %{
        file_url: Media.file_url(item.media),
        remaining: remaining,
        artist: item.song.artist,
        title: item.song.title,
        url: item.song.url
      }
    end
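
To show the two response shapes side by side without Ecto or Phoenix, the sketch below uses plain maps in place of the schemas. Everything in it is illustrative: the module name `AudioPayloadSketch`, the `payload/2` function, and a `file_url` key on the media map (standing in for `Media.file_url/1`) are assumptions, and it dispatches with function clauses rather than the `cond` used in the view.

```elixir
defmodule AudioPayloadSketch do
  # A song item carries artist, title, and link metadata for display.
  def payload(%{song: %{} = song, media: media}, remaining) do
    %{
      file_url: media.file_url,
      remaining: remaining,
      artist: song.artist,
      title: song.title,
      url: song.url
    }
  end

  # A bumper has nothing to display, so only the audio URL and timing are sent.
  def payload(%{bumper: %{}, media: media}, remaining) do
    %{file_url: media.file_url, remaining: remaining}
  end
end
```

A front end can tell the two apart by checking whether the metadata keys are present in the decoded JSON.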

(from the LofiLimoWeb.PlayerController module)

    def current_audio(conn, _params) do
      now = DateTime.utc_now()
      play = LofiLimo.Audio.current_play(now)
      LofiLimo.Audio.increment_play_gets(play)
      remaining = LofiLimo.Audio.Play.remaining(play, now)
      render(conn, :current_audio, play: play, remaining: remaining)
    end

    def current_background(conn, _params) do
      now = DateTime.utc_now()
      play = LofiLimo.Backgrounds.current_play(now)
      remaining = LofiLimo.Backgrounds.Play.remaining(play, now)
      render(conn, :current_background, play: play, remaining: remaining)
    end
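
The `remaining` value sent to the front end comes from `Play.remaining/2`, which is not shown in this article, nor is `Play.ended/1`. A minimal sketch of what such helpers might look like follows, assuming a play has a `started` timestamp and a `duration` in milliseconds. The module name `PlayTimingSketch` and the use of a plain map instead of the Ecto schema are assumptions for illustration.

```elixir
defmodule PlayTimingSketch do
  # The moment a play ends: its start plus its duration in milliseconds.
  def ended(%{started: started, duration: duration}) do
    DateTime.add(started, duration, :millisecond)
  end

  # Milliseconds left at `now`; negative once the play has already ended.
  def remaining(play, now) do
    DateTime.diff(ended(play), now, :millisecond)
  end
end
```

For a play that started at 12:00:00 with a duration of 180,000 ms, a request at 12:02:30 would get 30,000 ms remaining, which the front end can use directly as its timer delay.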

I hope this article has given you some ideas about how you could use Phoenix and Elixir in your own projects. To keep the length of this article reasonable, I tried to stick to the very core of the program. If you'd be interested to see how some of the supporting work is done, please drop me a line. I could write about how media is imported and stored, using a content distribution network, analytics, MP3 parsing, you name it!

Comments and discussion on this article are available at Lobsters and Hacker News.

If you'd like to really see how sausage gets made, I have some videos of the development process in a playlist on my YouTube channel.

If you have any questions, comments, or corrections please don't hesitate to drop me a line.

Aaron D. Parks